Contents

- 1 General

- 1.1 Are there any additional costs involved in using your software?

- 1.2 What servers do I need and why do I need them?

- 1.3 Why are there multiple ice servers / which one is used?

- 1.4 How to set up my own STUN / TURN server?

- 1.5 What does the signaling server actually do?

- 1.6 The node.js signalling server doesn’t work / prints the error message “Invalid message received: ” with some cryptic characters. How to fix this?

- 1.7 The signaling server fails to listen on the standard ports 80 / 443 for ws/ wss

- 1.8 What are those ssl.key / ssl.crt files coming with the signaling server / How to use secure connections?

- 1.9 Why can’t I connect (via the internet)?

- 1.10 How can I use the asset with other network libraries e.g. UNET or photon?

- 1.11 How to play ringtone before establishing a call? or: How to exchange information before a call?

- 1.12 Can I run the signaling server on one of the clients?

- 1.13 How do I run the signaling server on my webserver / apache / php webserver?

- 1.14 Can I use WebRTC Video Chat to broadcast my game screen / window?

- 1.15 How to send larger amounts of data or a whole file?

- 1.16 What is the maximum resolution, FPS?

- 1.17 Conferences / multiple users in a single video chat?

- 1.18 How many users can I have in a single conference call?

- 1.19 How to set the resolution?

- 1.20 How do I change the microphone / speaker (Windows)?

- 1.21 Why is the video so grainy / pixelated?

- 1.22 What codecs are used?

- 1.23 What image format is used?

- 1.24 Can I send data / stream between different platforms?

- 1.25 Can I connect the asset to another WebRTC application / library?

- 1.26 Can I stream the unity screen?

- 1.27 Can I send files?

- 1.28 Where is the AudioSource / How can I access the raw PCM data?

- 1.29 How can I record audio?

- 1.30 How can I send audio from other audio sources?

- 1.31 How do I switch cameras during the call?

- 1.32 The ConnectionId is not unique. How can I get a global ID for each user?

- 2 Platform specific issues

General

Are there any additional costs involved in using your software?

There are no fees you need to pay! But you do need to get your own server as I can’t guarantee that my test servers are always running. Check out the questions about server support for more information.

What servers do I need and why do I need them?

WebRTC connects users directly to each other thus allowing you to reduce server load. But you still need a server / multiple server applications to initialize the connection between the users.

Signaling server: This is a node.js server developed by me. It is using (secure) websockets to allow two users to find each other online using any kind of string as an address. For example if user B wants to connect to user A then user A needs to request an address from this server. User B can use that address to initialize a connection to User A.

The JavaScript source code is part of the WebRTC Network and Video Chat package (see server.zip + readme.txt!). I also provide a test server which is set up by default.

STUN server: One of the two kinds of ICE server. STUN support is part of the native WebRTC implementation and is needed to establish connections through a router / NAT. As most users are behind a router / NAT you will generally need it for any connections that aren’t inside your own LAN or WIFI. Currently, the library uses the server “stun:stun.because-why-not.com:443” but there are free alternatives available, for example Google’s public STUN server “stun:stun.l.google.com:19302”. Note that the Google server isn’t available in China. TURN servers such as the application “coturn” support STUN as well, so you won’t need two different server applications if you plan to set up your own.

TURN server: Second kind of ice server. If the STUN server fails to connect two peers directly to each other a TURN server can be used to forward messages between two users. A TURN server is not provided as it might cause a lot of traffic. This means that using the default configuration some users might not be able to connect if their router / NAT configuration doesn’t allow direct connections.

Please send me an e-mail with your Unity Asset Store invoice number if you need a TURN server for testing purposes.

A TURN server often supports STUN as well. In this case you only need one. TURN will only be used if STUN failed.

General guidelines:

- If you are developing / testing only or work on a hobby project: Use the provided test servers

- If your app runs in a local network without internet: Set up a signalling server within the LAN, no STUN or TURN needed

- If your app should connect via internet at low cost: Set up a signalling server + use a free STUN server or set up your own STUN server if you want to make sure it is always available. Some users might not be able to connect without TURN but even many AAA games still use this configuration.

- If you want to make sure everyone can connect or your application will often be used in businesses (they often block direct connections): Set up a signalling server + one or multiple TURN servers.

Why are there multiple ice servers / which one is used?

WebRTC uses 3 different ways of establishing a connection

- host – a connection that connects directly to the other side using the local available IPs

- srflx – a connection using STUN to avoid problems with a router / NAT that might be in between the two systems trying to connect

- relay – using TURN. This is the only type that uses an external server to relay data. This only makes sense if the two other ways failed

WebRTC / ICE has an internal process that uses a priority system (RFC 5245) to determine which one is best. Usually, this means direct host connections will be tried first, then STUN, then TURN. It will also prefer UDP over TCP. The details of the process are left to the internals of WebRTC and aren’t determined by the asset. They actually change regularly during WebRTC updates.
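To make the priority system a bit more concrete, here is a minimal Python sketch of the recommended priority formula from RFC 5245 (section 4.1.2.1) together with the type preference values the RFC recommends. The function name and the default local preference of 65535 are my own choices for illustration. As noted above, the values actually used by a given WebRTC build may differ.

```python
# Type preferences recommended by RFC 5245, section 4.1.2.2.
# Assumption: a real WebRTC build may use different values.
TYPE_PREFERENCE = {"host": 126, "srflx": 100, "relay": 0}


def candidate_priority(cand_type: str, local_preference: int = 65535,
                       component_id: int = 1) -> int:
    """Recommended formula: 2^24 * type pref + 2^8 * local pref + (256 - component id)."""
    type_pref = TYPE_PREFERENCE[cand_type]
    return (type_pref << 24) + (local_preference << 8) + (256 - component_id)


if __name__ == "__main__":
    # Higher numbers are preferred, so host beats srflx and srflx beats relay.
    for cand_type in ("host", "srflx", "relay"):
        print(cand_type, candidate_priority(cand_type))
```

The type preference sits in the highest bits, which is why a relay candidate only wins if no host or srflx candidate pair works.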

How to set up my own STUN / TURN server?

The project coturn is a great solution! It acts as a TURN and STUN server. Check out the INSTALL and readme files of the project to get started.

After installation the configuration is usually done via the file “/etc/turnserver.conf”. For testing with webrtc make sure to set at least the properties “user=myusername:mypassword” and “realm=mydomain.com”. You can also use a custom port using “listening-port=12779”. If you use cloud services (like azure) then your server might be behind a NAT/Firewall. In this case you also need to set the property “external-ip”.

After the configuration is done you can use your server using the credentials and the url “turn:mydomain.com:12779”.

To test if your server works properly with WebRTC you can use the tool trickle-ice.

In its results, the important lines are of the type “srflx” and “relay”. “srflx” is the system’s public IP detected by STUN, allowing direct connections through a firewall/NAT. “relay” is an IP / port provided by TURN, allowing indirect connections via the server. The type “host” stands for possible direct connections in your own LAN without the help of TURN/STUN!
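If you have the raw ICE candidate lines at hand, a small script can tell you whether STUN and TURN produced results. The Python sketch below assumes the usual candidate attribute layout (foundation, component, transport, priority, address, port, then "typ" and the type). The sample lines are made up and use documentation IP ranges, and all function names are hypothetical.

```python
def parse_candidate(line: str) -> dict:
    """Extract the main fields of a single ICE candidate line."""
    parts = line.strip().split()
    if len(parts) < 8 or not parts[0].startswith("candidate:") or parts[6] != "typ":
        raise ValueError("not an ICE candidate line: " + line)
    return {
        "transport": parts[2].lower(),
        "priority": int(parts[3]),
        "address": parts[4],
        "port": int(parts[5]),
        "type": parts[7],
    }


def summarize(lines) -> dict:
    """Report whether srflx (STUN) and relay (TURN) candidates were gathered."""
    types = {parse_candidate(line)["type"] for line in lines}
    return {"stun_ok": "srflx" in types, "turn_ok": "relay" in types}


# Made-up sample data, not output of a real server.
SAMPLE = [
    "candidate:1 1 udp 2130706431 192.168.0.10 50000 typ host",
    "candidate:2 1 udp 1694498815 203.0.113.5 50001 typ srflx raddr 192.168.0.10 rport 50000",
    "candidate:3 1 udp 16777215 198.51.100.7 50002 typ relay raddr 203.0.113.5 rport 50001",
]

if __name__ == "__main__":
    print(summarize(SAMPLE))
```

If only “host” lines show up, neither STUN nor TURN answered, and the URL, port or credentials are the first things to check.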

What does the signaling server actually do?

For WebRTC peers to connect they need to “find” each other online and exchange connection information first. For this the signaling server allows the following:

- It gives an endpoint an address that can be used to receive connections, or fails if the address is already in use (e.g. used by IBasicNetwork.StartServer and ICall.Listen)

- It allows other users to connect to these addresses, or fails if the address isn’t in use (IBasicNetwork.Connect and ICall.Call)

- Once connected it gives each side a ConnectionID and will forward messages to the other side using these ID’s

- It can release an address to stop incoming connections (IBasicNetwork.StopServer). Existing connections stay valid.

- It also detects disconnects and will inform the other sides if needed + free any unused addresses

Additionally, it has a special mode “address_sharing”. This mode connects all users calling IBasicNetwork.Listen to each other in an n x n full mesh topology.

Note that if using ICall with the default settings the signaling server connections are cut automatically once direct WebRTC PeerConnections are established (except conferences). If using IBasicNetwork / IMediaNetwork this can be done manually by using the “StopServer” call.
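The address bookkeeping described above can be modelled in a few lines. This Python sketch is only a toy in-memory model to show the rules (an address can be taken once, connecting to an unused address fails, releasing an address keeps existing connections). It is not the code of the node.js server, the class and method names are hypothetical, and it simplifies things by using one connection ID per connection instead of one per side.

```python
import itertools


class ToySignalingServer:
    """Toy model of the signaling rules. The real server uses websockets."""

    def __init__(self):
        self._listeners = {}      # address -> name of the listening endpoint
        self._connections = {}    # connection id -> (listener, caller)
        self._ids = itertools.count(1)

    def listen(self, endpoint: str, address: str) -> bool:
        """Reserve an address. Fails if the address is already in use."""
        if address in self._listeners:
            return False
        self._listeners[address] = endpoint
        return True

    def connect(self, endpoint: str, address: str):
        """Connect to an address. Returns a connection id, or None if unused."""
        listener = self._listeners.get(address)
        if listener is None:
            return None
        connection_id = next(self._ids)
        self._connections[connection_id] = (listener, endpoint)
        return connection_id

    def stop_listening(self, address: str) -> None:
        """Release an address. Existing connections stay valid."""
        self._listeners.pop(address, None)

    def is_connected(self, connection_id: int) -> bool:
        return connection_id in self._connections


if __name__ == "__main__":
    server = ToySignalingServer()
    server.listen("user A", "test123")
    print("connection id for user B:", server.connect("user B", "test123"))
```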

The node.js signalling server doesn’t work / prints the error message “Invalid message received: ” with some cryptic characters. How to fix this?

This is likely caused by an old node.js version being used. You can use the command “node -v” to find out the version. The server was tested and developed with node.js version 6.9! Versions with a leading zero e.g. 0.10.x won’t work.

The signaling server fails to listen on the standard ports 80 / 443 for ws/ wss

Usually, these ports require root access, but running nodejs as root isn’t particularly secure. Instead, you can use the following command to give nodejs access to ports below 1024:

#if not installed yet:
sudo apt-get install libcap2-bin

sudo setcap cap_net_bind_service=+ep "$(readlink -f "$(which node)")"

What are those ssl.key / ssl.crt files coming with the signaling server / How to use secure connections?

They contain the SSL certificate needed for the encrypted websocket connections (wss). These files will work with the native version only (because it doesn’t check the validity of the certificate yet). They will not work with browsers. You will have to make sure you get a valid certificate and replace the files yourself. One way to get a free certificate is https://letsencrypt.org/.

Why can’t I connect (via the internet)?

For this question there is sadly no easy answer. There are many reasons why connections can fail and the network protocols won’t return any information except “I can’t connect”.

So far most common problems are:

- Firewall blocks access. Either local or one in a router

- Router/NAT doesn’t support or allow opening incoming ports and no TURN server is used

- STUN / TURN URL’s are wrong

- NetworkConfig is wrong, was never set up or was set up after the Call was already created

If you can’t quickly identify the problem then please try the following steps:

1. Create an empty project with the newest version of the Asset.

Ideally, use only the Unity Editor on Windows 64 bit. (If Android is needed make sure you set up AndroidManifest.xml!)

Open the example scene. Either callscene (CallApp) for the audio/video version or chatscene (ChatApp) for the network version.

2. Start the example app on your test system to connect locally.

The scenes contain two instances of the same app. Enter a test address/room name e.g. test123 and press join (CallApp) or Open Room (ChatApp).

You should now see “Waiting for incoming call address: test123” (CallApp) or “Server started. Address: test123” (ChatApp) in the text area or log.

If this doesn’t happen / you see an error: This means the connection to the signaling server failed. (This server is responsible for keeping track of addresses and relays connection information so that a direct connection can be established later.)

This could mean the following:

- Firewall blocked access to server

- You are connected to the internet via a proxy (which isn’t supported)

- The internet connection is dead or buggy

- try “ping google.com”. If you see errors / dropped packets or a high value for time (e.g. above 400ms) then something is wrong with your internet connection (or you are in China).

- The signaling test server is dead

- try “ping because-why-not.com”. If you see a high latency here but low latency with google the test server might be updating or dying. If the problem persists after a few hours send an email to BecauseWhyNotHelp@gmail.com

- Try it with a different internet connection. If the problem persists and you excluded all other problems: send an email.

If it worked, close the app and test it on your second system using the same internet connection but a different address / room name. If it fails on only one system, it is very likely a local problem e.g. a local firewall.

3. Connect two systems that are in the same LAN / Wifi

If this doesn’t work but everything in step 2 worked then it could be due to local network restrictions e.g. Schools and Universities often block direct connections between systems. If you can exclude these problems then please send an email.

4. Connect two systems via internet

Please make sure to read “What servers do I need and why do I need them?” to find out what servers are involved and what they are for.

If all steps before worked but this one fails it could be due to the following reasons:

- The STUN/TURN server is offline. This server is supposed to open the port of the router to allow incoming connections. You can find the server URL via the Unity Inspector if you select the Chat/CallApp GameObject (value uIceServer and uIceServer2). Usually something like this: “stun:stun.because-why-not.com:443” or “turn:turn.because-why-not.com:443” or “stun:stun.l.google.com:19302” (free google server, doesn’t work in China). For information on how to check if the server works see “How to set up my own STUN / TURN server?”.

- Both involved routers / NAT’s don’t allow opening incoming ports automatically. In this case two users can’t connect directly but both might still be able to connect to other users that have a different router. You might need a TURN server to avoid this problem. Please send an e-mail with your Unity Asset Store invoice number if you need a TURN server for testing purposes. Username and password of this server will change regularly to avoid abuse / too much traffic.

- A Firewall blocks a wide range of ports / blocks custom protocols: This often happens in big organizations, universities and schools. If only UDP is blocked you can force TCP via turn by changing the URL to “turn:turn.because-why-not.com:443?transport=tcp”. Using TCP and port 443 will make the connection appear like HTTPS to a firewall. As this forces TCP make sure to also add the same URL without “transport=tcp” to ensure STUN and UDP TURN is still available. This can be done by using the C# API directly via NetworkConfig.IceServers instead of using the Unity Editor UI.

- In some rare cases the use of VPN can also help avoiding a firewall

How can I use the asset with other network libraries e.g. UNET or photon?

It isn’t possible to route the asset’s network communication via another network connection but you can still use it in combination with other network solutions. Here is a typical scenario for using the asset together with UNET:

- Multiple users are connected via an existing UNET connection as part of an application. User A and User B both agreed to join an audio chat via the application’s UI / application specific logic

- Both applications will setup a call via the ICall API as shown in the example applications

- The app for User A will now create a random token which will be used to connect to User B

- User A will now start listening for an incoming audio connection by using ICall.Listen(“random token”)

- User A will send the random token to User B via UNET (only to User B so no one else can connect)

- User B receives the token and will now use WebRTC Video Chat to connect to user A (API call ICall.Call(“random token”))

- Both user A and user B will now receive an event indicating that the connection is established and they will be able to communicate
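The random token in this scenario should be hard to guess, because anyone who knows it could connect instead of User B. As a language-neutral illustration, this is how such a token could be generated in Python. The function name and the token length are my own assumptions. In a Unity project you would use an equivalent C# facility.

```python
import secrets


def new_call_token(num_bytes: int = 16) -> str:
    """Return an unguessable, URL-safe token to use as a one-time call address."""
    return secrets.token_urlsafe(num_bytes)


if __name__ == "__main__":
    # User A would listen on this token and send it only to User B.
    print(new_call_token())
```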

How to play ringtone before establishing a call? or: How to exchange information before a call?

By default the ICall interface will establish the connection immediately after one user calls the other. This isn’t always ideal as many scenarios require the exchange of information before this happens e.g.

- Playing a ringtone / allowing the user to actively accept a call

- Exchanging meta information about the user (such as username, user state, capabilities, e-mail, …)

- Login information or a token for authentication

- Measuring latency before establishing a connection

- Creating a room based system and checking if there is still space in the room

As there are so many different scenarios it is difficult to provide a general solution that works for all. Instead the system is built to be easily wrapped by another system. This system can either be a custom server application or another ICall / IBasicNetwork interface.

Here is an example how you could use another ICall or IBasicNetwork interface to play a ring tone first:

1. User A creates an ICall object with video / audio set to false (or IBasicNetwork interface), listens for incoming connections and shares the address with other users

2. User B connects to user A

3. User B sends a request to establish an audio / video call. (using ICall.Send* or IBasicNetwork.Send* methods)

4. User A hears the ringtone and can now accept the call

5. After accepting the call User A will create a second ICall interface and wait for an incoming connection using a randomly generated token

6. User A answers the request of step 3 by sending the token via the existing connection to user B

7. User B connects to User A

This example can be changed to fit any scenario by simply changing the request / answer messages used in Step 3 and 6.
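How the request / answer messages look is entirely up to you. As one possible shape, the Python sketch below encodes them as small JSON objects turned into bytes, which is what you would hand to a Send method. The message kinds (“call_request”, “call_accepted”, “call_rejected”) and all function names are invented for this example and are not part of the asset.

```python
import json


def encode_message(kind: str, **fields) -> bytes:
    """Serialize a message as UTF-8 JSON so it can be sent as a byte array."""
    payload = {"kind": kind, **fields}
    return json.dumps(payload).encode("utf-8")


def decode_message(data: bytes) -> dict:
    return json.loads(data.decode("utf-8"))


def handle_call_request(data: bytes, accepted: bool, token: str) -> bytes:
    """Build user A's answer to the request user B sent in step 3."""
    request = decode_message(data)
    if request.get("kind") != "call_request":
        return encode_message("error", reason="unexpected message")
    if not accepted:
        return encode_message("call_rejected")
    # The token is the address of user A's second, media enabled call.
    return encode_message("call_accepted", token=token)


if __name__ == "__main__":
    request = encode_message("call_request", caller="user B")
    print(decode_message(handle_call_request(request, True, "example-token")))
```

Extra fields such as a username or a capability list can be added to the request without changing the overall flow.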

Can I run the signaling server on one of the clients?

Yes, this is possible if all clients are within the same LAN. In this case no ICE server is needed.

How do I run the signaling server on my webserver / apache / php webserver?

If your hosting provider supports node.js you might be able to run it. Some providers require you to use a specific port or replace the port with an environment variable so it can be set by your provider. If only apache or php is supported you won’t be able to run the signaling server at all.

Please note that I can’t help with provider specific issues.

Can I use WebRTC Video Chat to broadcast my game screen / window?

Currently, only connected webcams are supported as video source! Due to multiple emails asking for this feature I decided to add custom video sources in the future. This will allow you to deliver any kind of image(s) / textures to the Video Chat plugin.

Update V 0.981: You can now find an example for this in the folder extra/VideoInput. The latency will likely be too high for realtime interaction though.

Also have a look at https://unity3d.com/legal/terms-of-service/software – 2.4 Streaming and Cloud Gaming Restrictions.

How to send larger amounts of data or a whole file?

Short answer:

Use bool ICall.Send(byte[] data, bool reliable, ConnectionId id) or INetwork.Send with chunks of data up to 8 KB. Consider using a separate ICall / INetwork object for large data transfers if you also use audio and video at the same time. This way you can reduce the risk of a high volume data transfer blocking internal control messages.

Long answer:

- Split your messages into 8 KB chunks / byte[] (16 KB – 1 byte is the theoretical max but you are on the safe side for further changes if you use 8 KB)

- Call send repeatedly with the data you want to send until it returns false. This means the last message hasn’t been sent in order to prevent a buffer overflow

- Retry sending after a little break of a few frames to give the network time to actually empty its buffers and send the data via the network

- Reassemble on the other side.

For V0.981 and before:

These versions don’t have a way to prevent buffer overflows yet which will trigger WebRTC to close the data channel and refuse to send any further messages. To avoid this you should only send messages up to 1MB and then wait for the remote side to send a confirmation after the data is received. After that you can send the next 1 MB (still in 8KB chunks!).
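The steps of the long answer can be sketched as follows. This is a Python illustration of the logic only: FakeChannel is a made-up stand-in for ICall / INetwork whose send returns False while its buffer is full, and the wait callback stands for “retry a few frames later”. In Unity you would do the same from a coroutine or the Update loop.

```python
CHUNK_SIZE = 8 * 1024  # 8 KB, as recommended above


def split_into_chunks(data: bytes, chunk_size: int = CHUNK_SIZE):
    """Split data into chunks of at most chunk_size bytes."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def send_all(chunks, send, wait) -> int:
    """Send all chunks in order. Returns how often a send had to be retried.

    send(chunk) must return False if the chunk was NOT sent (buffer full).
    wait() is called before each retry to give the network time to drain.
    """
    retries = 0
    for chunk in chunks:
        while not send(chunk):
            wait()
            retries += 1
    return retries


class FakeChannel:
    """Hypothetical stand-in for a network object with a small send buffer."""

    def __init__(self, capacity: int = 4):
        self.capacity = capacity
        self.buffered = []
        self.received = []

    def send(self, chunk: bytes) -> bool:
        if len(self.buffered) >= self.capacity:
            return False  # buffer full, the chunk was not queued
        self.buffered.append(chunk)
        return True

    def flush(self) -> None:
        """Simulate the network delivering everything that is buffered."""
        self.received.extend(self.buffered)
        self.buffered.clear()


if __name__ == "__main__":
    payload = bytes(range(256)) * 400
    channel = FakeChannel()
    retries = send_all(split_into_chunks(payload), channel.send, channel.flush)
    channel.flush()
    # Reassembly on the other side: join the chunks in the order received.
    assert b"".join(channel.received) == payload
    print("chunks:", len(channel.received), "retries:", retries)
```

Reassembly is a simple join here because a reliable channel delivers chunks in order. For real transfers you will likely also want to send the total length first so the receiver knows when the file is complete.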

What is the maximum resolution, FPS?

This depends entirely on your device and the native WebRTC implementation. I recommend allowing the user to decide this so they can optimize it for their system. Not every device can handle the realtime encoding and decoding of full HD streams even if it has an HD camera connected to it. I consider 320 x 240 and 640 x 480 reasonably fast to use as default values. Have a look at the documentation of the class MediaConfig to see how to configure the resolution and fps.

Conferences / multiple users in a single video chat?

This is only an experimental feature that was added for free. The current example in extra/ConferenceApp can’t handle unexpected disconnects and is not suitable for use in production.

It is possible to create more reliable conference applications by using IMediaNetwork instead of ICall giving each user their own address. It will remain a feature only for experimental use though.

How many users can I have in a single conference call?

There is no clear limit to this. You can connect as many users as you want to but that doesn’t mean their systems and network connections are able to handle it. The asset fundamentally works on a peer to peer basis. A conference call between 10 users means that each user needs to download & decode 9 video and audio streams while also uploading & encoding 9 video and audio streams. This can work with a low resolution but will likely fail with 1080p even on modern systems. Also note that WebRTC will attempt to reduce the quality if it detects a network or CPU overload.

In the end the only way to determine the maximum number is to test it with your specific setup and use case. For this reason all sample applications in the asset support being duplicated multiple times in a single scene and on a single system. This way you can easily simulate specific use cases without the need for 10 different devices.
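The arithmetic behind the 10 user example is simple enough to put into a helper. This Python sketch (the function name is hypothetical) counts streams per user and direct connections overall for a full mesh in which everyone sends audio and video to everyone else.

```python
def mesh_load(users: int) -> dict:
    """Stream and connection counts for a full mesh conference call."""
    if users < 2:
        raise ValueError("a call needs at least two users")
    peers = users - 1
    return {
        "streams_up_per_user": peers,      # encode + upload once per peer
        "streams_down_per_user": peers,    # download + decode once per peer
        "peer_connections_total": users * peers // 2,
    }


if __name__ == "__main__":
    for users in (2, 4, 10):
        print(users, mesh_load(users))
```

The load per user grows linearly with the number of users, while the total number of connections grows quadratically, which is why full mesh conferences stop scaling fairly quickly.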

How to set the resolution?

You can have a look at CallApp.CreateMediaConfig. There you can set a minimal, maximal and ideal resolution for your use case. It isn’t possible to enforce a specific resolution though due to differences in browsers, operating systems and video devices.

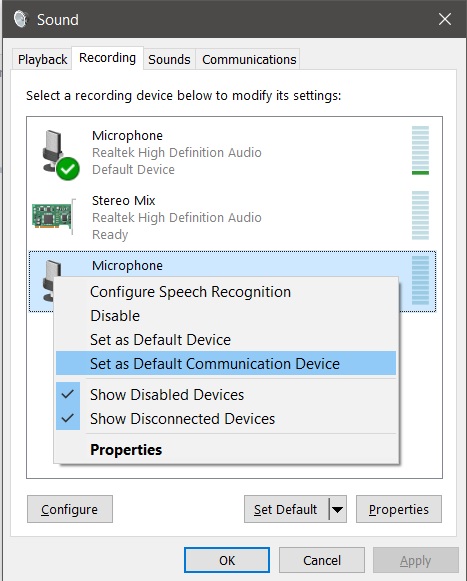

How do I change the microphone / speaker (Windows)?

The system currently uses the default microphone / speaker. In Windows this is the device set as “Default Communication Device”. (Control Panel -> Sound -> Choose “Recording devices” for microphone or “Playback devices” for speaker -> Right click on the device you want to use -> choose Default Communication Device)

Why is the video so grainy / pixelated?

The video is set by default to a low resolution. This is needed as some devices have very high quality cameras but not the CPU power to encode videos at such high quality. You can change the resolution in the CallApp.cs example via the method “CreateDefaultConfig” using mediaConfig.IdealWidth / mediaConfig.IdealHeight.

What codecs are used?

Encoding, decoding and formats are all determined by the WebRTC implementation. For native platforms the native WebRTC library from webrtc.org is being used. This version will default to VP8 video encoding and opus for audio. H264 might be supported in rare cases (e.g. iOS to iOS connections). No software encoder or decoder for h264 is included at the moment to avoid related licensing / patent issues. For WebGL the codecs available depend entirely on the browser and browser version. Please check the browser’s documentation.

What image format is used?

All used video codecs use i420p as an image format internally. This means alpha / transparency isn’t supported and chroma resolution is half of the image resolution.
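“Half of the image resolution” here means half the width and half the height for each of the two chroma planes. The Python sketch below shows what that means for the size of a single raw frame compared to a 4 bytes per pixel format such as ARGB. The function names are mine and the rounding for odd sizes is the usual convention, not something specific to the asset.

```python
def i420_frame_bytes(width: int, height: int) -> int:
    """One full resolution Y plane plus two chroma planes at half width and half height."""
    chroma_width = (width + 1) // 2
    chroma_height = (height + 1) // 2
    return width * height + 2 * chroma_width * chroma_height


def argb_frame_bytes(width: int, height: int) -> int:
    """4 bytes per pixel."""
    return width * height * 4


if __name__ == "__main__":
    print("I420 640x480:", i420_frame_bytes(640, 480), "bytes")
    print("ARGB 640x480:", argb_frame_bytes(640, 480), "bytes")
```

For even sizes this works out to 1.5 bytes per pixel, so a raw i420p frame needs less than half the memory of an ARGB frame.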

Can I send data / stream between different platforms?

Yes, all platforms supported can connect to each other.

Can I connect the asset to another WebRTC application / library?

No, this is currently not possible. WebRTC doesn’t standardize how a connection is established, thus different WebRTC applications are usually not compatible with each other. A future version might allow connecting to other WebRTC based systems but support for this feature will be limited.

Can I stream the unity screen?

In V0.982 and later a new API called NativeVideoInput allows users to send custom images using formats that are natively supported by WebRTC such as ARGB or i420p. You can find an example for this in the folder “extra/videoinput”. It will send a part of the screen by using Unity’s Camera object and the RenderTexture class. In real life scenarios it is usually best to send images in formats such as ARGB (alpha channel will be ignored) or i420p directly to conserve CPU time.

The API is considered an “extra” and is subject to limited support. Advanced programming skills are required. Note that WebGL and UWP builds can’t access this API.

Can I send files?

This functionality isn’t part of the API yet but you can always send the file’s content as byte[] yourself using WebRTC Network.

Where is the AudioSource / How can I access the raw PCM data?

There is no AudioSource and raw PCM data access isn’t supported. The native WebRTC Version / the browser will replay the audio automatically while receiving a stream without any interaction with the assets or C# Unity side code.

How can I record audio?

This is not possible. The only exception is using loopback devices / platform specific software that is able to record the audio the system replays.

How can I send audio from other audio sources?

This is not supported.

How do I switch cameras during the call?

This isn’t possible yet due to restrictions in the C++ wrapper. It is planned to change this in the future though.

It is possible to have two active ICall instances though. One can send audio and data and the other can be used for video. This way video can be cut if not needed.

The ConnectionId is not unique. How can I get a global ID for each user?

ConnectionId’s take over the same role as a local port in UDP / TCP. They are only useful for that single instance of ICall / IMediaNetwork / IBasicNetwork. So if you send one across to another user it loses its meaning because that user will have a different ConnectionId for each user / each local connection.

The most common way to handle this is by having an external system that is accessed via a REST API to check username / password and give out global ID’s and the ID’s of users you can connect to.

A decentralised version that doesn’t rely on an external system could look like the following:

- Each user locally creates their own unique ID by using Guid.NewGuid() (and stores it for later if needed)

- Whenever that user receives or creates a new connection, they send a message over it that says: “Hi I am 0f8fad5b-d9cb-469f-a165-70867728950e”

- The other side will now receive the GUID and via the event handler / message system the local ID it came from

- Use a Dictionary to store the GUID for each local ConnectionID

If now two users connect to each other they will automatically share their globally unique ID and also receive the Global ID / Connection ID combination to address that remote user. A user could even use their own GUID as an address to allow new incoming connections for other users that aren’t connected yet but might have received the GUID via other means.

Note that this system has some security implications as a user could easily steal the identity of another user by using their GUID.
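The decentralised approach can be sketched in a few lines. The Python below only illustrates the bookkeeping: uuid.uuid4() plays the role of Guid.NewGuid(), the “HELLO ” message format and the class and method names are invented for this example, and the connection IDs are plain integers. In the asset you would call the equivalent logic from your message and disconnect event handlers.

```python
import uuid


class PeerDirectory:
    """Maps local connection IDs to the global IDs announced by remote users."""

    PREFIX = "HELLO "

    def __init__(self):
        self.own_id = str(uuid.uuid4())
        self._global_by_connection = {}

    def hello_message(self) -> bytes:
        """Message to send over every new connection."""
        return (self.PREFIX + self.own_id).encode("utf-8")

    def on_message(self, connection_id: int, data: bytes) -> None:
        text = data.decode("utf-8")
        if text.startswith(self.PREFIX):
            # uuid.UUID raises ValueError if the announced ID is malformed.
            global_id = str(uuid.UUID(text[len(self.PREFIX):]))
            self._global_by_connection[connection_id] = global_id

    def on_disconnect(self, connection_id: int) -> None:
        self._global_by_connection.pop(connection_id, None)

    def global_id_of(self, connection_id: int):
        return self._global_by_connection.get(connection_id)

    def connection_of(self, global_id: str):
        for connection_id, known_id in self._global_by_connection.items():
            if known_id == global_id:
                return connection_id
        return None


if __name__ == "__main__":
    alice, bob = PeerDirectory(), PeerDirectory()
    # Each side has its own local connection ID for the same connection.
    alice.on_message(1, bob.hello_message())
    bob.on_message(7, alice.hello_message())
    print(alice.global_id_of(1) == bob.own_id, bob.connection_of(alice.own_id))
```

Nothing in this sketch verifies that the announced GUID really belongs to the sender, which is exactly the identity theft risk mentioned above.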

Platform specific issues

WebGL

HTTPS / WSS problems

To connect two peers websockets are used to exchange connection information. Some problems can arise due to restrictions imposed by browsers on websockets. To avoid them ideally host your unity application on https and your signaling server on wss using the same domain and the same certificate.

Problems you might run into:

- Browsers might refuse access to WebRTC if you use insecure connections. Especially combining https hosting with an insecure websocket will not work everywhere!

- Self signed certificates might not work depending on your browser and configuration! If you do use them make sure you visit the page via https:// first to accept the certificate and then start your webgl app.

- Firefox (and maybe others) don’t seem to automatically accept certificates if they are needed for Websockets only but not for https. For example if you test your webgl application locally but using the default signaling server configuration on because-why-not.com then Firefox might refuse to connect. The connection will work after visiting https://because-why-not.com once, thus allowing firefox to accept and store the certificate. The problem might reoccur after my server updates its certificate (happens every few months)!

The UI is all black

This is a unity bug. Close unity, delete the library folder and then start again.

Windows

Unity Editor freezes while connecting / stopping the editor while connecting

Using ssl (due to the wss websocket connection for the signaling server) can cause unity to freeze. This is a bug in unity / mono. It is announced to be fixed in version 5.3.7. Until then I added a workaround that should reduce freezing to 5-20 seconds max instead of the usual 5 minutes. This shouldn’t happen anymore in more recent versions. If it does happen please create a test project and send an error report.

Mobile devices

iOS and Android mobile phones are designed with microphone and speaker being very close together. This isn’t a problem as usually you hold the device to your ear during a phone call, thus the volume of the speaker can be very low. This system doesn’t work with high volume. The microphone would pick up the sound of the speaker causing a strong echo. Due to this problem phones will automatically turn down the volume / or switch to another speaker if a phone or VoIP call is active.

To increase the volume these devices have a Loudspeaker mode (usually visible as a speaker button during regular phone calls). It allows replaying the audio in high volume and then filters the incoming signal to remove the audio it replayed. It might also change the speaker in use entirely. This is quite complicated technology and often reduces the audio quality. It depends a lot on your specific device.

Also keep in mind that the device manufacturers do not expect the user to be in a phone call and play music / sound effects at the same time! Some devices might have problems with this.

Android

Why can’t I connect on Android? Why is audio / video failing on Android?

A common problem for Android users is an AndroidManifest.xml file that is missing or simply placed in the wrong location. This file is project specific and can’t be supplied / placed by the Asset. It is needed to give your application the rights to access internet, video and audio, all of which are needed for WebRTC to run properly. Please check the readme.txt for how to set up the project for Android and what permissions need to be requested for the asset to work. There is also an example file in WebRtcNetwork\Plugins\android\AndroidManifest.xml.

The related unity documentation can be found here. Please make sure to read the documentation and place your file at the exact location: Assets/Plugins/Android/AndroidManifest.xml !

You can ensure your properties are set correctly in the final apk via the command “%YOUR_ANDROID_SDK%/build-tools\23.0.2\aapt.exe l -a your_android_app.apk”

Why can’t I hear the other side speaking on Android / why is the audio so quiet?

WebRTC Android doesn’t treat the audio received from the other peer as “Media”. Instead it will treat it like a phone call. It will automatically set the volume to a level that is comfortable for someone who holds the phone directly to the ear. The file AndroidHelper.cs (V0.975) contains multiple functions that can help you:

- AndroidHelper.SetSpeakerOn(true) – Turns on the speaker of the phone.

- ICall.SetVolume – Android applications support a volume above 1 (up to 10).

- AndroidHelper.SetCommunication() – This switches Android in communication mode. The user can then use the audio buttons to change the call volume. (Called by default starting V0.983)

iOS

Error during XCode build of the Unity project or error during first access of the asset

Possible error messages you might get:

- Symbol not found: _SWIGRegisterExceptionCallbacks_WebRtcSwig

- Similar errors stating _SWIGExceptionHelper

- dyld: Library not loaded: @rpath/webrtccsharpwrap.framework/webrtccsharpwrap

These errors usually mean the native iOS plugin of the asset (webrtccsharpwrap.framework) isn’t included in the build correctly or can’t be accessed / loaded. This can have several reasons:

- You are trying to run the asset on the emulator (the iOS emulator isn’t supported at the moment as it runs on a different processor architecture)

- You might have renamed the asset folder or moved files. This can lead to wrong paths in the Xcode project. Make sure to leave the asset folder unchanged

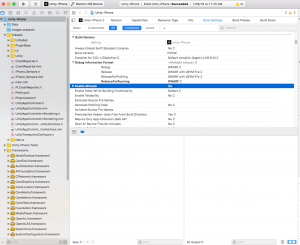

- The folder “Plugins/ios/universal/webrtccsharpwrap.framework” settings might have been changed or lost. If you select this folder in the project view (on the right side of the view!) make sure “iOS” is ticked in the Inspector tab. Make sure to never move any files outside of the Unity Editor as this will lead to the settings getting lost

- The asset uses the file Editor/IosPostBuild.cs to configure your Xcode project for you. In some cases this might fail (e.g. for Unity 2017.2 and earlier). See manual Xcode project setup below to find out how to set up the project yourself

- There is a known problem in the Unity versions starting with 2017. If you build for iOS you must select iOS and press “Switch platform” first before pressing “Build&Run”. Otherwise Unity won’t run “IosPostBuild.cs” to configure the xcode project for you.

See the answer below for how to manually configure an Xcode project if the automatic method fails. This might need to be done repeatedly!

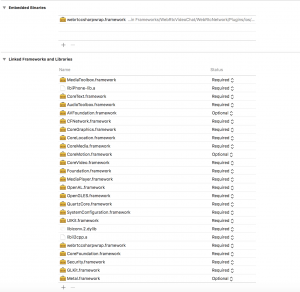

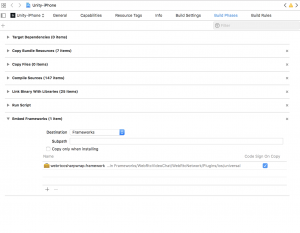

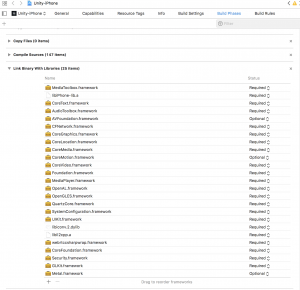

How to set up the iOS Xcode project?

Add the file webrtccsharpwrap.framework as embedded framework

Make sure it is added as “Linked Binary With Libraries”

Set Enable Bitcode to No

NOTE: Newer Unity versions generate two separate Projects: “Unity-iPhone” and “UnityFramework”. iPhone-Project must embed the binaries but both “UnityFramework” and “IPhone-Project” should have bitcode turned off.